Neo4j Graph Implementations

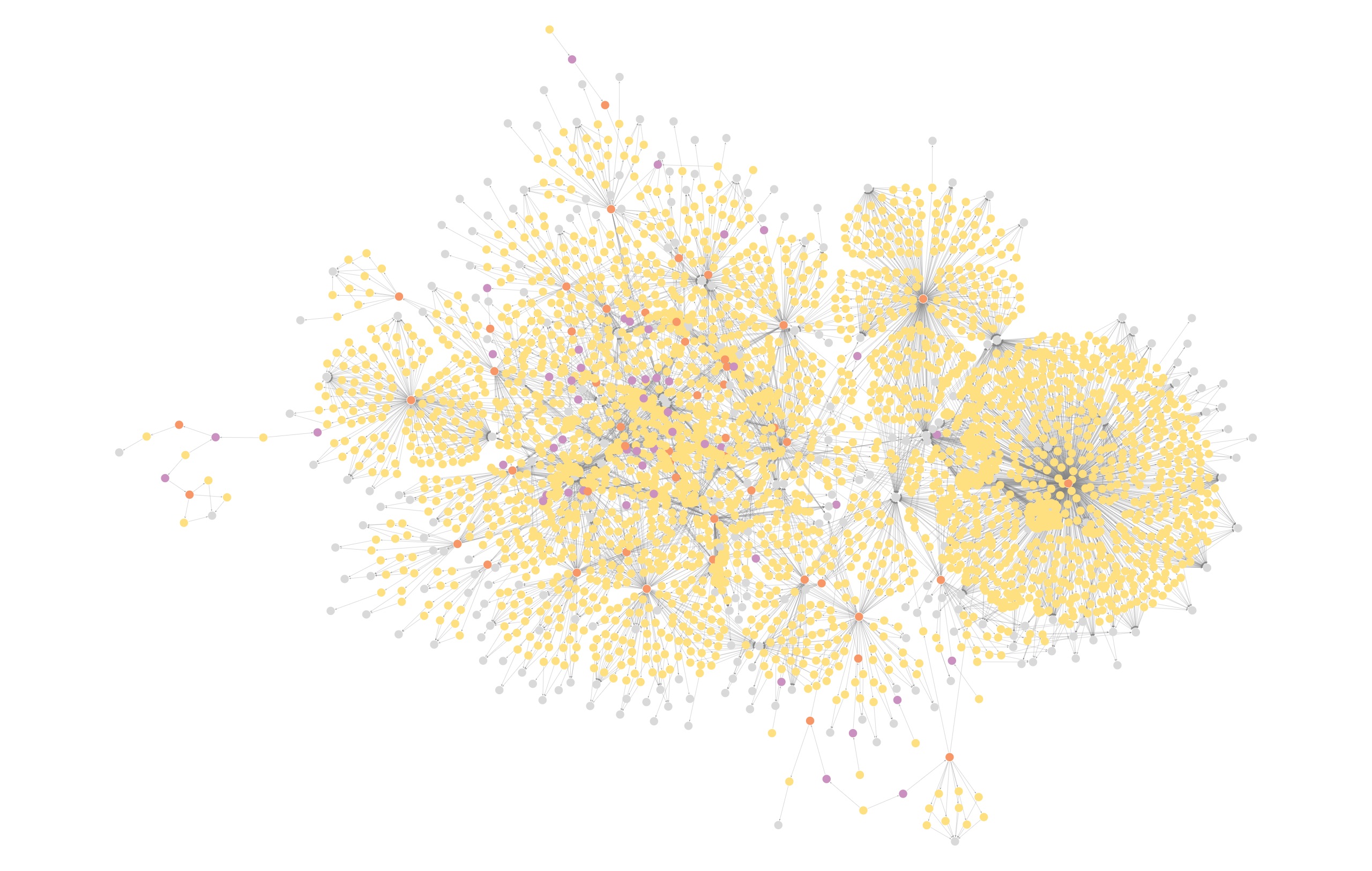

The influx of data from multiple origins, be they databases or applications, is amplifying the need for connected-data solutions. This confluence of integrated data sources is the sweet spot for graph implementations, where both integration and analytic traversal of disparate data sets are design features. A critical step in framing your analytics solution is the graph data discovery process, which examines existing cross-domain data stores and identifies key relationships and properties, along with their data quality characteristics, to establish an efficient graph data model. These deployment activities also include building reliable, performant data pipelines that are both extensible and auditable to ensure consistency across your analytics environment. In addition, we provide your teams with training and knowledge transfer services specific to your needs, leaving you well positioned to develop new features and maintain existing ones.

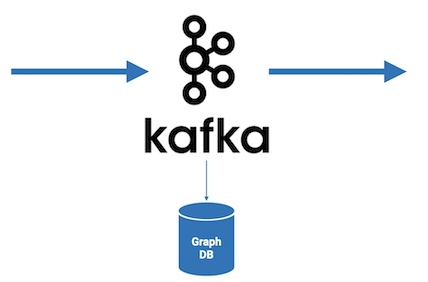

Data Streaming with Kafka

Data consistency and mobility are age-old issues for technology solutions, but with the advent of data streaming you can now introduce real-time analytics platforms that are current in near real time, as opposed to the hours or days of latency common in batch ETL pipelines. Built on data streaming, our Neo4j and Kafka solution offers an opportunity to deploy graph-based analytic systems within your existing enterprise environment without disrupting existing systems or operations. This is enabled by the Change-Data-Capture (CDC) features available through Kafka Connect, which support low-latency replication into your graph analytics environment. This approach, along with a granular services layer (GraphQL), allows enterprise applications to adopt these new analytics without overhauling existing back-end systems or data pipelines, and represents a great on-ramp for implementing your first graph.
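
As a rough illustration of the replication step, the sketch below turns a single change event into a parameterized Cypher statement. The event shape (`op`, `table`, `key`, `after`), the table-to-label convention, and the function name `cdc_event_to_cypher` are assumptions made for this example; they are not the message format of any particular connector.

```python
from typing import Any


def cdc_event_to_cypher(event: dict[str, Any]) -> tuple[str, dict[str, Any]]:
    """Translate one (hypothetical) change event into Cypher plus parameters.

    Assumed event shape: {"op": "c" | "u" | "d", "table": str,
                          "key": Any, "after": dict}
    """
    # Assumed convention: the source table name becomes the node label.
    label = event["table"].capitalize()
    # Labels cannot be passed as Cypher parameters, so validate before
    # interpolating the label into the query text.
    if not label.isidentifier():
        raise ValueError(f"unsafe label: {label!r}")

    op = event["op"]
    if op in ("c", "u"):
        # Create and update are both handled as an idempotent upsert.
        query = f"MERGE (n:`{label}` {{id: $id}}) SET n += $props"
        return query, {"id": event["key"], "props": event["after"]}
    if op == "d":
        # Remove the node together with its relationships.
        query = f"MATCH (n:`{label}` {{id: $id}}) DETACH DELETE n"
        return query, {"id": event["key"]}
    raise ValueError(f"unsupported operation: {op!r}")


if __name__ == "__main__":
    sample = {"op": "c", "table": "customer", "key": 42, "after": {"name": "Ada"}}
    statement, params = cdc_event_to_cypher(sample)
    print(statement)
    print(params)
```

Treating creates and updates as the same `MERGE` keeps replays of the stream idempotent, which is one reasonable design choice when the pipeline may deliver an event more than once. The resulting statement and parameters could then be handed to a Neo4j driver session for execution.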

Service Layer with GraphQL

GraphQL is one of the most efficient methods for standing up an API service layer for your Neo4j graph environment. The existing Neo4j libraries and the structural symmetry between graph and API make it a great fit for deploying your connected-data analytics. GraphQL also supports mutations (full CRUD services) for the graph, which allows you to use Neo4j as the primary data store for both analytics and updates. GraphQL is also designed for integrating API results from multiple sources, allowing aggregate and/or cross-domain application logic. Additionally, Neo4j has just released the Kafka CDC Source Connector, which allows real-time transactional pushes to your enterprise environment (e.g., develop new graph features whose changes are instantly pushed to the enterprise).
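
To make the symmetry between graph and API concrete, here is a minimal sketch that renders a small graph model as GraphQL type definitions. The `NodeType` and `Rel` classes, the `to_sdl` helper, and the example labels are all hypothetical. The `@relationship` directive follows the convention used by the Neo4j GraphQL library, but its exact arguments should be checked against that library's documentation.

```python
from dataclasses import dataclass, field


@dataclass
class Rel:
    name: str               # GraphQL field name exposed by the API
    rel_type: str           # relationship type stored in the graph
    target: str             # label of the node at the other end
    direction: str = "OUT"  # OUT or IN, relative to the owning node


@dataclass
class NodeType:
    label: str
    properties: dict[str, str]  # property name -> GraphQL scalar type
    relationships: list[Rel] = field(default_factory=list)


def to_sdl(node: NodeType) -> str:
    """Render one node type as a GraphQL object type definition."""
    lines = [f"type {node.label} {{"]
    # Node properties map one-to-one onto scalar fields.
    for prop, gql_type in node.properties.items():
        lines.append(f"  {prop}: {gql_type}")
    # Relationships map onto list fields pointing at the target type.
    for rel in node.relationships:
        lines.append(
            f"  {rel.name}: [{rel.target}!]! "
            f'@relationship(type: "{rel.rel_type}", direction: {rel.direction})'
        )
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    customer = NodeType(
        label="Customer",
        properties={"id": "ID!", "name": "String"},
        relationships=[Rel("orders", "PLACED", "Order")],
    )
    print(to_sdl(customer))
```

Each node label becomes an object type, each property a scalar field, and each relationship a field that resolves to the neighboring type, which is why a graph model tends to translate into an API schema with little extra mapping logic.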

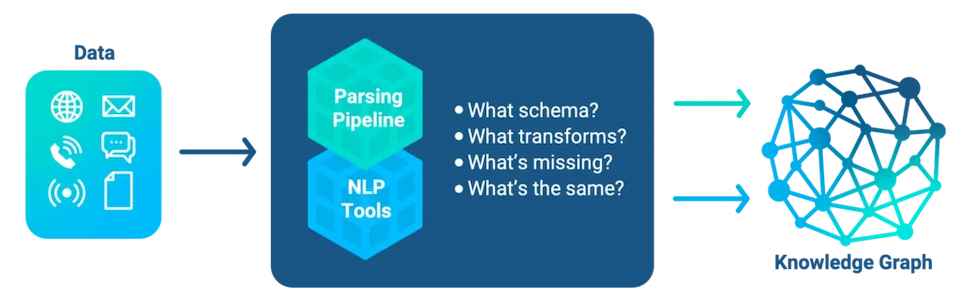

Natural Language Processing / Knowledge Graphs

This data services offering integrates your unstructured content (e.g., customer feedback and interactions, financial reports, healthcare and medical records, social media, and other sources) as a basis for business analytics. The solution can be driven by existing ontologies or used to define a customized one based on your cross-domain business data assets. These Enterprise Knowledge Graphs can then be integrated with external resources to develop new metrics (e.g., sentiment measurement, ESG metrics, topical news and media mentions). Initially these offer durable and measurable KPIs, and they also form the basis for Graph Data Science projects that make further connections across internal and external data sets.